I’m glad the semester (and study year) is over, but also very appreciative that it’s been one of my most creatively rich stretches in the past few years.

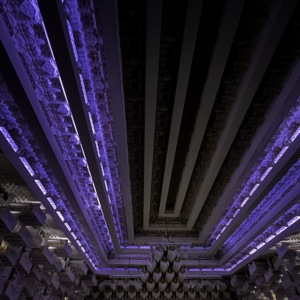

Capitol

The “final” iteration is looking about as good as it can without me being present in the venue to note any further tweaks I’d like to make. I was happy to receive some positive feedback, mostly because people understood what I was aiming for. One comment in particular was pretty much spot on: the ending felt like an out-of-body experience, with everything coming together after the slight blurring of the staccato patterns in the buildup, and the sudden cut was a somewhat alarming jolt back to reality. Other comments, such as the feeling that it was something one could get lost in, also matched what I was aiming for. If I end up extending this, or developing something similar, I may go in the direction of building something that is designed to be experienced with eyes closed. I think that’d work incredibly well at Capitol. I know it’s been done for a Dark Mofo work, but it’d be interesting to do it for something with a more positive emotion.

What didn’t work

Pretty early on, I decided to move away from the idea, suggested in class, of giving it movements or a very dynamic arc. I did attempt this in one early version, but it would have felt disjointed.

Also early on, I decided to move away from using metal guitars, as well as clean, Raster-Noton-style minimalist sound design. Mostly in terms of keeping things simple, this was a good idea. I’m still convinced that I can create a hypnotising metal work, but that’s for a future project. I also decided against any tempo automation, since it would have been hard to keep track of with multiple layers and the methods I used to generate sounds; however, towards the end of the piece, some polyrhythmic elements come in briefly, which add to the “blurring” I mentioned earlier.

My experience with Pharos Designer definitely didn’t work out the way I expected. I had an idea to play with colour in a way that was similar to chromatic aberration, but that was scrapped before I even started trying to get things together in Pharos, as I don’t think the effect would have worked in the space. My initial experiments with manually controlling lights were quite tedious to program, and I largely abandoned manual control in favour of slightly less precise, preset-based control.

What worked

From the beginning of my sound experiments, I knew that loading recordings into Emission Control 2 would be the way to go for the work. It was essentially the reason why I quickly decided against using guitars or more minimal tones, as neither sounded good when processed using the software. Essentially, the piece is made up of many different Korg Wavestation presets, all playing the same very slow chord progression, processed using Emission Control, which was sometimes set to oscillate between different recordings on the fly. I spent almost an entire week recording various versions, including using different repetition intervals, varying levels of randomness (well, not really randomness; it was more like several LFOs cascading over each other to make odd patterns), and different sound sources. There are over 20 different layers in the project, which all fade in and out slowly.
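The "several LFOs cascading over each other" idea can be illustrated with a minimal sketch. This is not how Emission Control 2 works internally, and the `Lfo` type, the `cascadeLfo` function, and the rates and depths are all hypothetical. It only shows how chaining slow sine oscillators, each one bending the phase of the next, produces a pattern that sounds irregular while being fully deterministic.

```typescript
// Hypothetical sketch: none of these names or values come from Emission Control 2.
interface Lfo {
  rateHz: number; // base frequency of this oscillator
  depth: number;  // how strongly the previous LFO's output bends this one's phase (radians)
}

// Evaluate a chain of sine LFOs at time t (in seconds).
// The output of each LFO is added to the phase of the next one,
// so the final value is deterministic but hard to predict by ear.
function cascadeLfo(lfos: ReadonlyArray<Lfo>, t: number): number {
  let value = 0;
  for (const lfo of lfos) {
    value = Math.sin(2 * Math.PI * lfo.rateHz * t + lfo.depth * value);
  }
  return value; // always within [-1, 1]
}

// Example chain with made-up rates: three slow, unrelated oscillators.
const exampleChain: Lfo[] = [
  { rateHz: 0.07, depth: 0 },
  { rateHz: 0.19, depth: 2.5 },
  { rateHz: 0.53, depth: 4.0 },
];

// Map the result onto some parameter range, e.g. a repetition interval in ms.
function toInterval(value: number, minMs: number, maxMs: number): number {
  return minMs + ((value + 1) / 2) * (maxMs - minMs);
}
```

Calling `toInterval(cascadeLfo(exampleChain, t), 20, 400)` at successive times would give a slowly wandering interval, which is one way of getting "odd patterns" without true randomness.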

Once I started getting used to the idiosyncrasies of Pharos Designer, it became a little easier to manage. As mentioned above, I quickly learned that it’s OK to use the presets, rather than trying to create everything from scratch myself; it meant that I could adjust things like timing a lot more easily.

The idea of ambiguous movement shown in my initial presentation worked out quite well, as can be seen on the ceiling from around 6:30 onwards, where each strip of lights flashes at a different rate. This means that at certain points the lights appear to move towards the centre, at others away from it, or even both at once, which I thought was one of the key hypnotic moments of the design.
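The reason different flash rates read as movement in changing directions can be sketched numerically. This is an illustration only, not the Pharos Designer programming: the function names and the 1.0 Hz and 1.1 Hz rates are assumptions. The sketch compares the flash phase of two neighbouring strips; whichever strip is slightly "ahead" suggests the direction of travel, and because the rates differ, that lead drifts and eventually flips sign.

```typescript
// Hypothetical sketch; the rates below are invented, not taken from the actual show.

// Position within the flash cycle, from 0 (just flashed) up to but not including 1.
function flashPhase(rateHz: number, t: number): number {
  const cycles = rateHz * t;
  return cycles - Math.floor(cycles);
}

// Signed lead of the inner strip over the outer strip, wrapped into [-0.5, 0.5).
// Positive: the inner strip is ahead; negative: the outer strip is ahead.
// The sign is what the eye tends to read as direction of travel.
function phaseLead(outerRateHz: number, innerRateHz: number, t: number): number {
  const d = flashPhase(innerRateHz, t) - flashPhase(outerRateHz, t);
  return d - Math.floor(d + 0.5);
}

// Two neighbouring strips with slightly different (assumed) rates.
const outerHz = 1.0;
const innerHz = 1.1;

const leadAt2s = phaseLead(outerHz, innerHz, 2); // inner strip is ahead here
const leadAt7s = phaseLead(outerHz, innerHz, 7); // outer strip is ahead here
```

With more than two strips, different neighbouring pairs can have opposite signs at the same moment, which corresponds to the "both at once" effect described above.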

Future plans

I would like to create a long-form work for the Capitol, perhaps something approaching an hour in duration, but will need to think about how to structure it so that it keeps the audience interested. Perhaps something with long stretches in a similar style to my hypnotising work, but with some movements where things get a bit more complex or even brutal. Also, I’d love to use the screen as well, even for simple colour washes, to activate the architecture in a way that the lighting doesn’t; using flashes between the screen and lighting to give the illusion of the architecture moving would be highly effective.

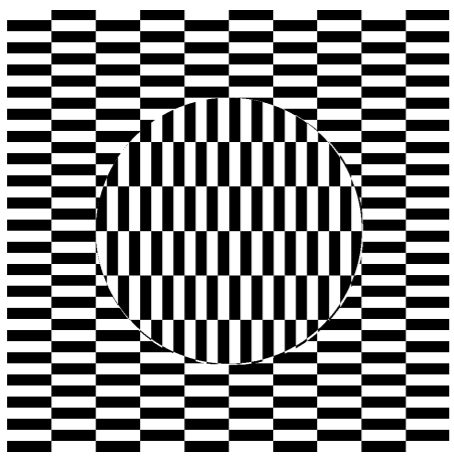

Phase

There’s not much more to write about this, because it’s been in a finished state for a couple of weeks now, but it has been an interesting exercise in restraint.

What didn’t work

I’m still a little conflicted about the way it should be presented; part of me enjoys the ambiguity of the circles being invisible until they hit the base of each square, and then silhouetted by the flash, but the other part agrees with comments about that idea diminishing the hypnotic experience by removing or reducing the sense of it being a kinetic sculpture captured in a browser.

Aside from stylistic decisions, there are still some issues I’d like to address in terms of movement and sound. Sound-wise, there are occasional glitches, which may be fixed by limiting the number of active squares to a smaller number, like 8. The movement issues mostly arise when switching browser tabs; I don’t know if there’s any way to fix this, and I’d have to run some tests.
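One possible cause of the tab-switching issue, and this is an assumption rather than something I’ve confirmed in Phase, is that browsers generally pause or throttle animation callbacks in background tabs, so the first frame after returning can see a very large time gap. A common mitigation is to clamp the per-frame time step. The sketch below uses hypothetical names (`clampDelta`, `advance`, `MAX_STEP_MS`) and an assumed 50 ms cap; it is not taken from the Phase source.

```typescript
// Hypothetical names and values; not taken from the Phase source.
const MAX_STEP_MS = 50; // assumed cap on how much time a single frame may simulate

// Time elapsed since the last frame, limited to the range [0, maxStepMs].
// A long pause (e.g. a backgrounded tab) is treated as one short step
// instead of one enormous jump.
function clampDelta(nowMs: number, lastMs: number, maxStepMs: number = MAX_STEP_MS): number {
  const dt = nowMs - lastMs;
  return Math.min(Math.max(dt, 0), maxStepMs);
}

// Move a circle by speed * elapsed time, so motion does not depend on frame rate.
function advance(y: number, speedPxPerMs: number, dtMs: number): number {
  return y + speedPxPerMs * dtMs;
}
```

In a browser, `clampDelta` would be called with the timestamp passed to each `requestAnimationFrame` callback. The trade-off is that the animation effectively freezes while the tab is hidden rather than catching up, which may or may not suit a piece that is meant to stay in sync with its audio.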

What worked

The decision to cut down from a set of three browser-based works to two was very good for my mental health, and allowed me to focus on one while still having time to work on other projects (and have some downtime as well). I’ve still got the other two works in various stages of completeness, and will continue to work on them in the future.

I’m pretty happy that Phase ended up being very close to what I proposed in my initial presentation, which means I’m getting a lot better at coming up with ideas that I can actually realise.

Future plans

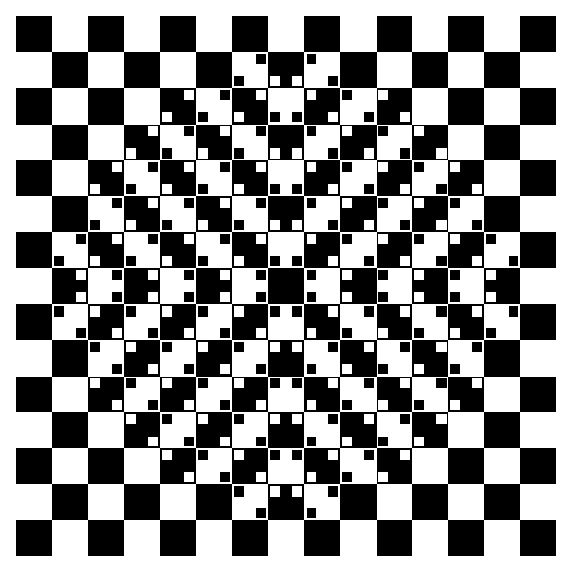

My browser-based works are an ongoing project, as they’re often quite good coding exercises for me, and have allowed me to develop a somewhat consistent style. I’d love to, at some point, consolidate them all as levels, or mini-games within a larger, possibly platform game, where each of the individual pieces is a puzzle that must be solved by playing a certain melody or interacting in a certain way before the player can progress.

Another implementation is for these works to be translated into physical space, using projection mapping, gestural control, audio reactivity, and multichannel sound. I can imagine a set of installations in subdivided spaces which can be accessed individually.

Collaboration – Capitol

I made the minor changes I mentioned last week and removed the ending. I was sad to lose it, as I thought it provided some nice closure to the piece, but it’s true that it was a step down in intensity compared to the previous section. While there were a million things I thought I could adjust slightly, I had to make the call to consider it finished.

What didn’t work

There were so many iterations of the main chord progression, all of which just sounded too cheesy, possibly only to me, but cheesy nonetheless. This was actually the part I spent the most time on.

I still don’t think I quite nailed the Iglooghost / Woulg vibes I was going for; I think I need to study their work a lot more in order to figure out what they’re doing and why it resonates with me, and then figure out how to write music inspired by them without wearing my influences on my sleeve.

What worked

Pat’s positive reaction to my experimental ideas allowed me to push this piece pretty far. I was a little cautious of having four sections that seemed to have no relation to each other, but Pat was really into the idea and inspired me to work on getting them to transition into each other a bit more smoothly.

Collaboration – Lightning

I wrote a lot about this last week, but since then, I added the elements I mentioned (scrunching paper through FFT effects, and guitar lead buzzing), which ended up working a lot better than I expected. There’s a definite arc to the piece, which highlights each element, sometimes solo. When the buzzing and scrunching come in, everything else is greatly reduced in volume, giving a feeling of the system breaking somewhat, before everything comes back in stronger than before for the bright ending.

I’m actually incredibly happy with how this turned out, and there were no ideas that I tried that didn’t work; in fact, the whole piece was, as mentioned, built on a “happy accident” that emerged through viewing the initial sculpture video while working on another piece of audio (for a different project). From there, the project just kept building as I went back and forth with Pat, and at one point I was so excited about it that I made three iterations in one day.

Overall reflection

It’s a shame I didn’t get to work on some of the more ambitious ideas that I had, including the Grand Organ, but that may not have worked out the way I expected anyway. I’ll have to see if I can hook it up in the future though.

One other thing I wanted to explore was multichannel audio, but due to restrictions, I don’t think I would have been able to deliver a work that I would have been satisfied with. Again, it’s something I’d like to explore in the near future.

I acknowledge that my research has been a little less in-depth than it could have been, and if I do want to continue into further HE study I’ll have to step it up. From this course, however, I’ve collected a huge list of research opportunities which I’m keen to get into, including everything from the psychological effects of rhythm to further study in the fields of mathematics and physics, both of which I can apply to my browser-based (and eventually standalone/installation-based) works.